Real-Time Graphic Notation Based on Gesture Data

Seis Canciones for voice and live electronics

Arcos for cello and augmented violin bow

Arcos – Erica Wise, cello // Gil Dori, augmented violin bow

The graphic notation for this work is generated from bowing gesture data extracted from two instruments: a cello and a self-built augmented violin bow. The graphics therefore do not merely symbolize gestures; they show the actual gestures.

Published paper about Arcos and its composition process:

Gil Dori, Using Gesture Data to Generate Real-Time Graphic Notation: a Case Study, International Conference on Technologies for Music Notation and Representation, 2020.

I used a Myo armband to record cello bowing data, and the augmented bow’s own position tracking module to record its gesture data. After processing the data, I used it to recreate the gestures on the screen, as a form of real-time graphic notation based on imitation. This notational approach allows for an immediate, intuitive interpretation on the spot, a crucial feature for the practice of real-time scores.
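As a rough illustration of the processing step described above, the following Python sketch smooths a stream of position readings and maps it to screen coordinates. It is not the code used in Arcos, which runs in a Max patch; the function names, the smoothing factor `alpha`, and the screen size are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def smooth(samples: Sequence[float], alpha: float = 0.2) -> List[float]:
    """Exponential moving average; alpha is a hypothetical smoothing factor."""
    out: List[float] = []
    prev = None
    for x in samples:
        # The first sample passes through; later ones blend with the history.
        prev = x if prev is None else alpha * x + (1 - alpha) * prev
        out.append(prev)
    return out


def to_screen(value: float, lo: float, hi: float, size: int) -> int:
    """Linearly map a value in [lo, hi] to a pixel index in [0, size - 1]."""
    if hi == lo:
        return 0
    t = (value - lo) / (hi - lo)
    t = min(1.0, max(0.0, t))  # clamp readings that fall outside the range
    return round(t * (size - 1))


def gesture_to_pixels(
    xs: Sequence[float],
    ys: Sequence[float],
    width: int = 800,
    height: int = 600,
    alpha: float = 0.2,
) -> List[Tuple[int, int]]:
    """Turn raw x/y gesture readings into a path of screen positions."""
    sx, sy = smooth(xs, alpha), smooth(ys, alpha)
    if not sx or not sy:
        return []
    x_lo, x_hi = min(sx), max(sx)
    y_lo, y_hi = min(sy), max(sy)
    return [
        (to_screen(x, x_lo, x_hi, width), to_screen(y, y_lo, y_hi, height))
        for x, y in zip(sx, sy)
    ]
```

Each tuple in the returned path could then be used to position a drawn object, such as a virtual bow or a dot, one frame at a time.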

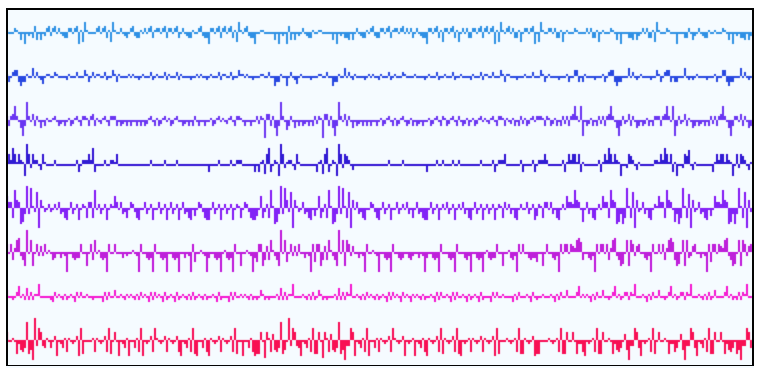

Myo EMG data of heavy and light bow pressure

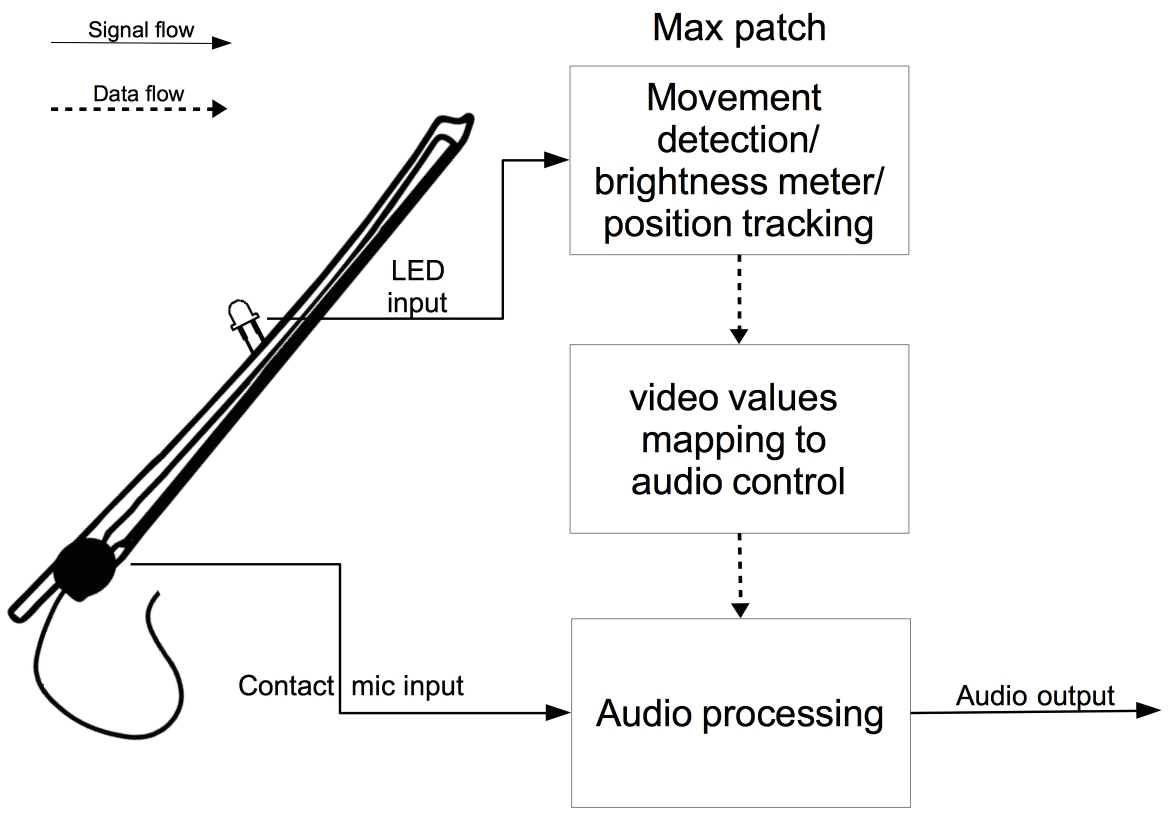

The augmented violin bow is a hybrid instrument that consists of a tangible part (a violin bow) and a digital part (a Max patch). Its purpose is to afford a physically engaging electronic performance focused on bowing gestures. I wanted to express the bowing idea not only in terms of movement, but also in terms of sound. Therefore, the sound of the instrument is the sound of the bow itself, amplified and processed according to movement and position data.

Augmented bow diagram

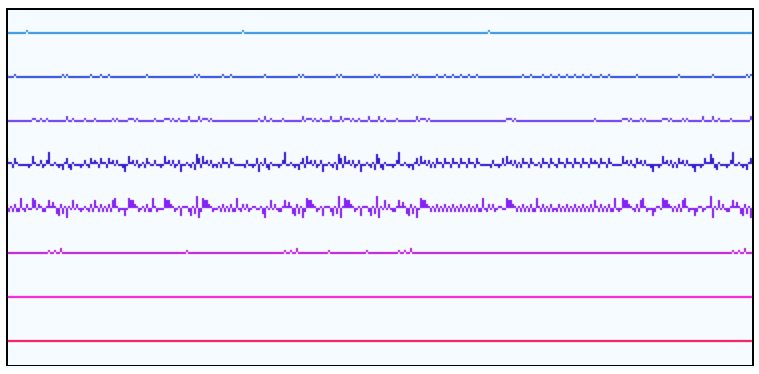

Augmented bow motion tracking as it appears in the Max patch

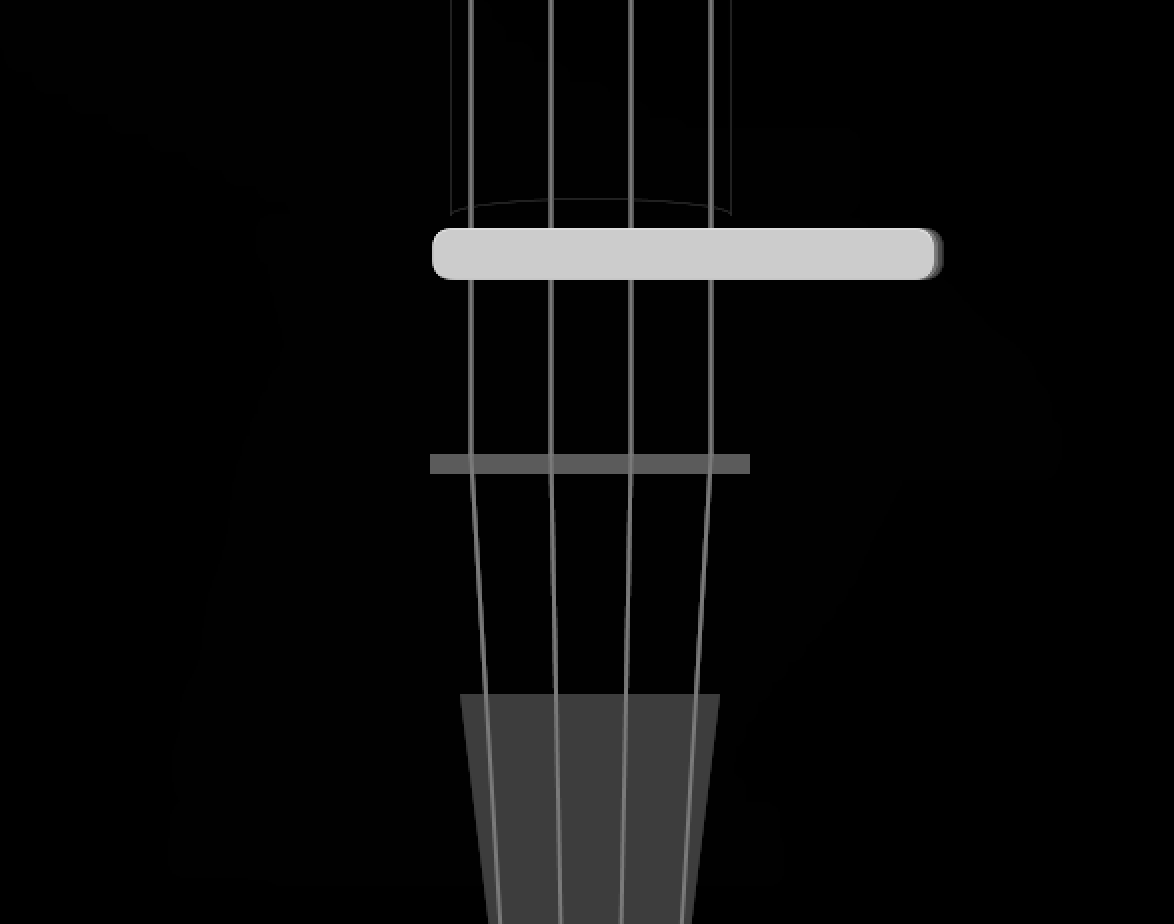

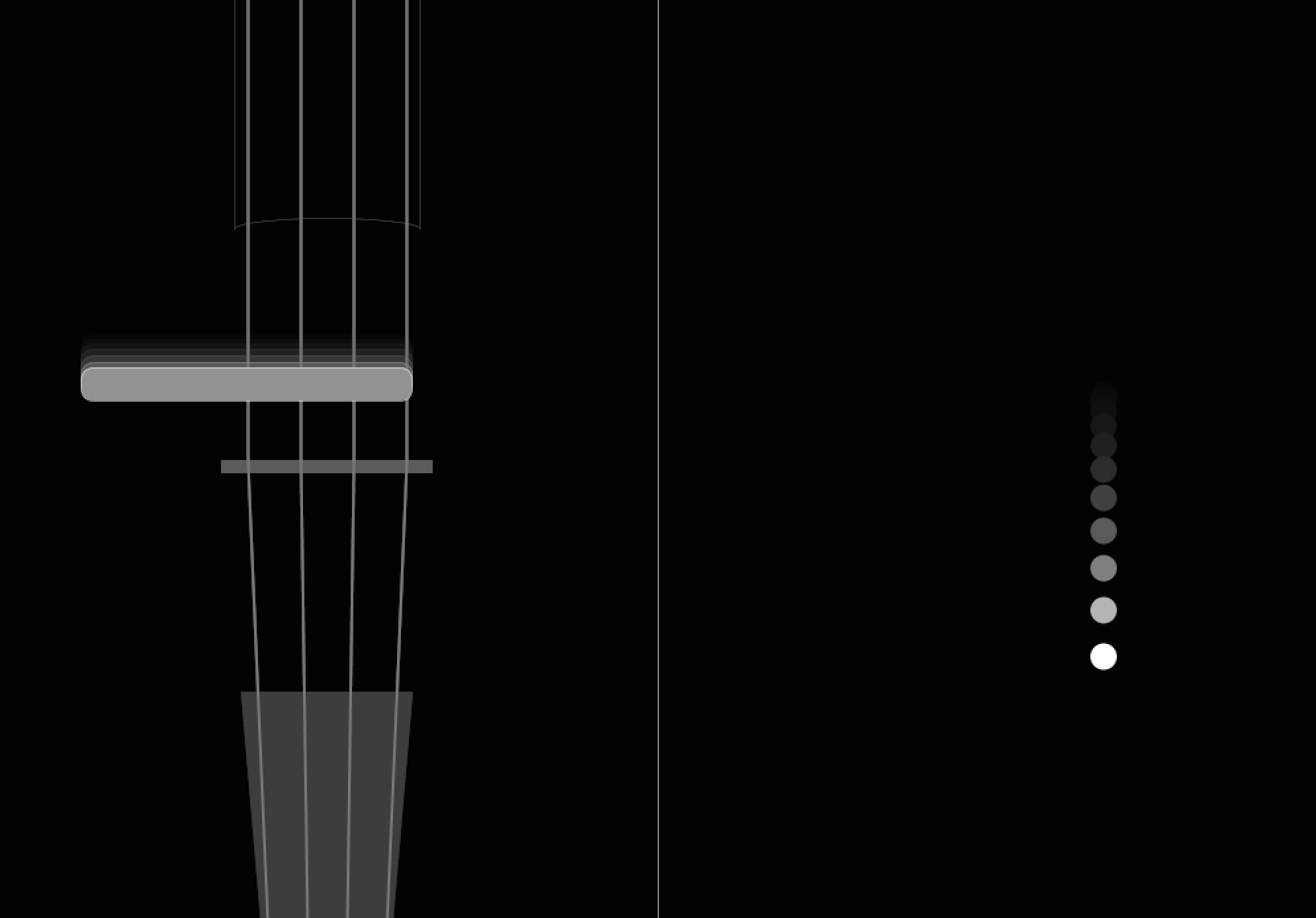

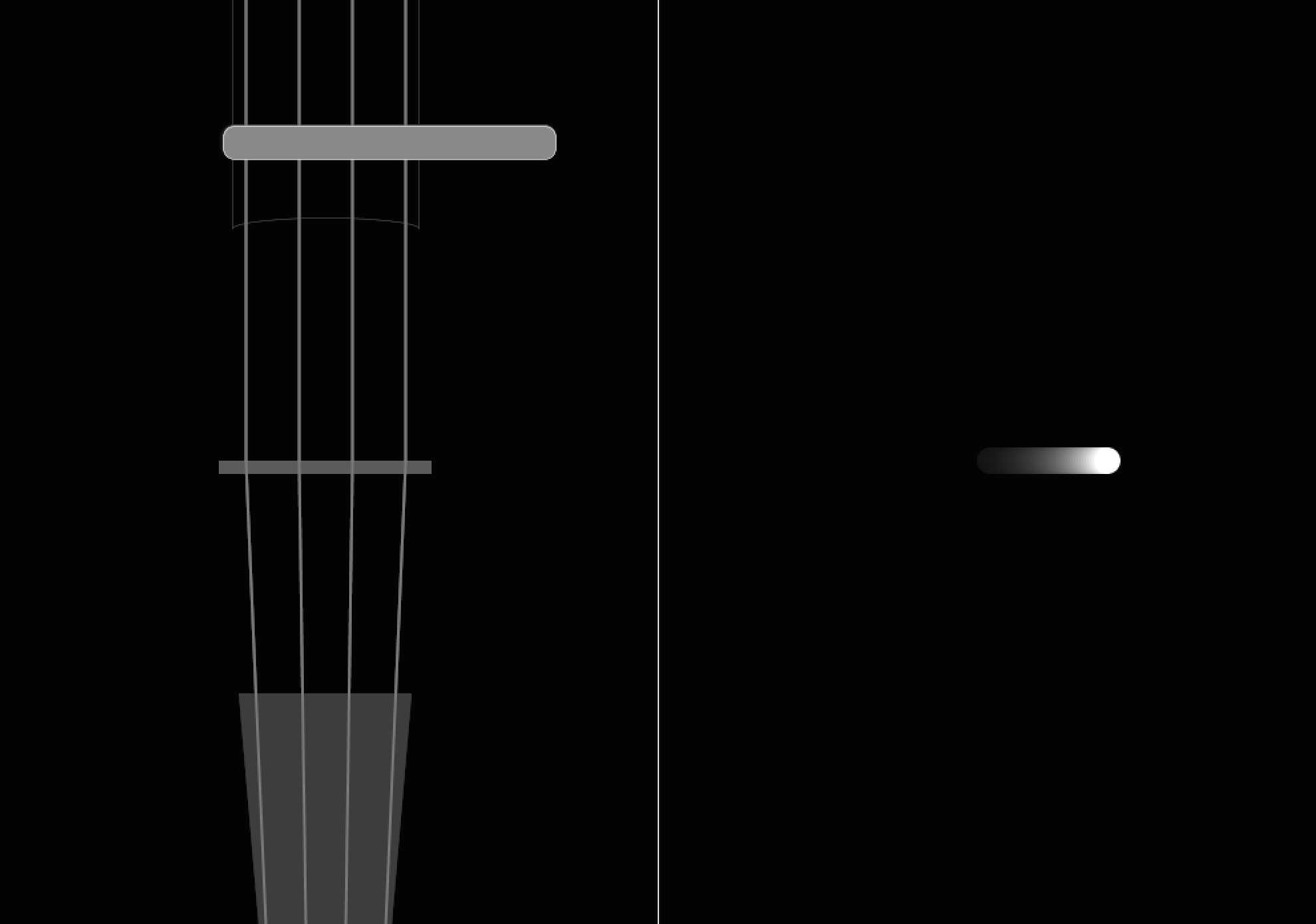

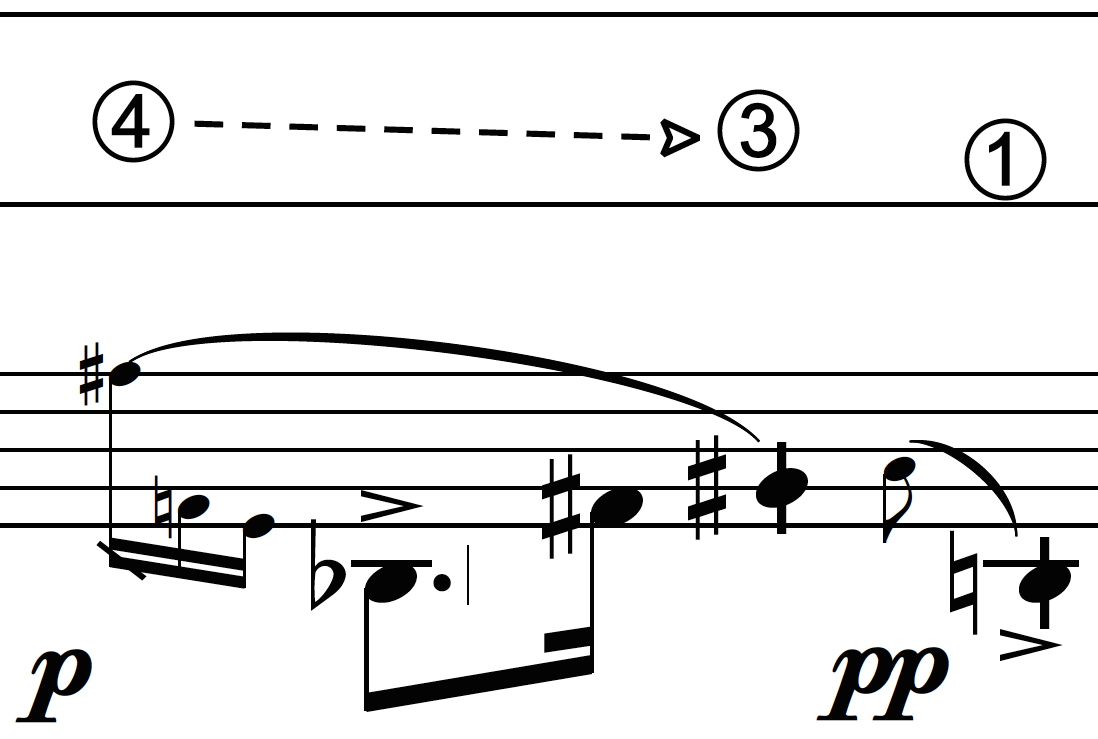

The graphic notation, in the form of gestures that the performers imitate, looks different for each instrument. The cello part shows the front of the instrument, including the lower part of the fingerboard and the upper part of the tailpiece. A virtual bow moves on the screen, driven by the gesture data, showing the cellist what to play. The augmented bow part shows a white dot, representing the LED mounted on the bow. The dot follows the bow’s movements as they appear in the motion tracking window of the Max patch, showing the performer which actions to carry out.

Score examples

Arcos was composed during my residency at Phonos Foundation, Barcelona (2019-2020), and was supported by the Fund for Independent Creators. The motion data was captured, analyzed, and integrated into the graphic score with the help of David Cabrera and Rafael Ramirez (Music and Machine Learning Lab at Music Technology Group, Universitat Pompeu Fabra). The cello bowing gesture data was recorded with cellist Leo Morello. The graphic designer Nir Bitton advised on the score design.

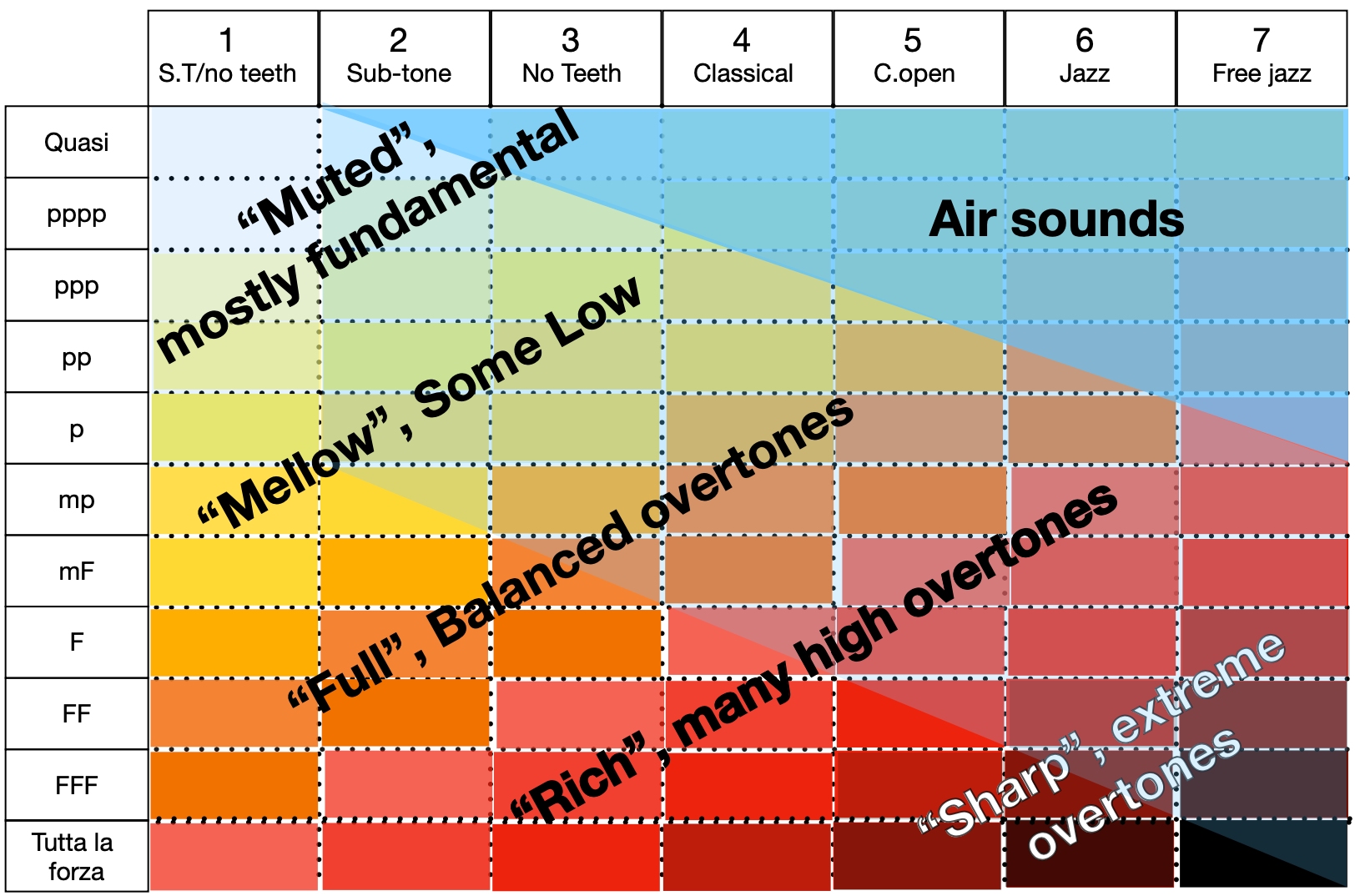

Timbre-Centric Composition Method for Saxophone

I have been working with saxophonist Jonathan Chazan on an artistic research project that explores a new way of composing for saxophone, based on timbral qualities. The long-term goal of this project is to develop a method for composers and performers to indicate specific timbres and interpret them precisely, aided by technology. Eventually, we want to expand it into a quantitative notational language that allows timbres to be expressed efficiently and unambiguously.

Our special interest in developing this method comes from the unique mental state a timbre-centric system creates in performing and listening to music, since it calls for paying attention to timbres in a more profound way. And, because it is not necessarily intuitive, it brings the composer and performer to novel, interesting, and unexpected musical places.

More information about Jonathan Chazan’s SpectraSax Project here.

hay que respirar

hay que respirar, for saxophone and live electronics, focuses on airy sounds and their relation to pitched sounds. In our prior joint piece, “Siete Dolores,” we discovered air-sound relations while exploring sounds produced by different mouth positions. We found that different positions, played with different amounts of air and embouchure pressure, create interesting and distinct airy-sound palettes with varying ratios of airy sound to clean sound. Respirar is built around these sound objects while incorporating better control of the newly defined sound palette.

The piece opens and ends with big breathing gestures, which are recorded, looped, and layered to create a more complex texture. The saxophone is also processed in real time by a spectral filter.
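The layering idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the live-electronics patch used in the piece: `layer_loops`, its `offset` and `gain` parameters, and the decay of each successive layer are all hypothetical choices.

```python
from typing import List, Sequence


def layer_loops(
    gesture: Sequence[float],
    repeats: int,
    offset: int,
    gain: float = 0.5,
) -> List[float]:
    """Mix `repeats` copies of a recorded gesture into one texture.

    Copy k starts k * offset samples after the first one and is scaled
    by gain ** k, so later layers sit further back in the mix.
    """
    if repeats < 1 or offset < 0 or not gesture:
        return []
    length = len(gesture) + offset * (repeats - 1)
    out = [0.0] * length
    for k in range(repeats):
        level = gain ** k
        start = k * offset
        for i, sample in enumerate(gesture):
            out[start + i] += level * sample
    return out
```

With an `offset` shorter than the gesture, the copies overlap and thicken the texture; with an `offset` equal to its length, the result is a plain decaying loop.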

hay que respirar (live recording) – Jonathan Chazan, saxophone

Theoretical model of air-sound for hay que respirar

More information about hay que respirar on Jonathan Chazan’s website

Siete Dolores

Siete Dolores is a 28-minute composition for saxophone and live electronics (audio and video/lights). The piece was designed to showcase this approach to composing with timbres, much like an etude that focuses solely on one timbral technique. The timbre analysis and identification in the piece are done with Sonic Print by Jean-Francois Charles, and with Zsa.descriptors by Mikhail Malt.
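To give a sense of what a numerical timbre descriptor looks like, here is a minimal Python sketch of the spectral centroid, a widely used measure of perceived brightness. It is a generic textbook computation chosen for illustration, not the implementation inside Sonic Print or Zsa.descriptors, and the function names are assumptions. The naive transform is slow and only meant for short frames.

```python
import cmath
import math
from typing import List, Sequence


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """Magnitudes of the non-negative frequency bins (naive DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_centroid(frame: Sequence[float], sample_rate: float) -> float:
    """Magnitude-weighted mean frequency of the frame, in Hz."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0  # silent or empty frame: no meaningful centroid
    n = len(frame)
    return sum((k * sample_rate / n) * m for k, m in enumerate(mags)) / total
```

A frame with more energy in the high partials yields a higher centroid than a darker one, which is the kind of difference a timbre classifier can use to tell sounds apart.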

Published paper about Sonic Print and Siete Dolores:

Jean-Francois Charles, Gil Dori, and Joseph Norman, Sonic Print: Timbre Classification with Live Training for Musical Application, Joint Conference on AI Music Creativity, 2020.

Siete Dolores (excerpts) – Jonathan Chazan, saxophone

Our goals for the next stages of the project are: to improve our spectral analysis and timbre recognition system; to gain a better understanding of the perception of different saxophone timbres; to compose a new, shorter piece; and to present our work in academic settings.

More information about Siete Dolores on Jonathan Chazan’s website